A few years ago, customers began telling us they were building more serious and commercially sensitive applications in JavaScript, and asking if we had a solution for protecting them, similar to DashO for Java and Android, and Dotfuscator for .NET. As the frequency of those questions increased, we decided it was time to provide the same level of protection for JavaScript that we do for Java and .NET. So in the second half of 2018, we listed a few options for providing such a product. We examined building the product on top of an existing open source package versus creating a new product from scratch. While we had nearly twenty years of experience with byte-code protection from developing DashO and Dotfuscator, we knew that protecting and obfuscating source code might require a different approach. But after much research and prototyping, we decided that our extensive experience in the Java and .NET space put us in a unique position to design and develop a new JavaScript protection product from the ground up.

In this post, we will share a few lessons we learned while building JSDefender.

Our engineering team did not want to reinvent the wheel. We meticulously examined the available popular open-source JavaScript obfuscation frameworks, because our initial goal was to support and contribute to an existing solution. We planned to fork it and add the required features that make up a supportable, professional-grade product.

As the weeks went on, we became more and more disappointed. We found only a few well-maintained projects, and most of them fell short of our quality expectations.

The most promising project was JavaScript Obfuscator by Timofey Kachalov. When we first evaluated this project, Timofey — mainly because he no longer had time to invest — was about to pass the ownership to anyone who would undertake it. The project had several dozen open issues at that time, and a large portion of them had been open for several months. We were seriously thinking of taking the ownership of JavaScript Obfuscator, as Timofey did an excellent job.

Our main concerns were:

By this time in our evaluation process, we had become very familiar with the nature of JavaScript obfuscation, and had built out some small prototypes to test our ideas. We decided the best path forward was to develop a new product from scratch.

What programming language do you use if you want to develop a tool for protecting JavaScript? Of course, JavaScript or TypeScript.

Nonetheless, we started with C#, which seems counterintuitive. One member of our engineering team had years of experience with parsers and syntax-tree transformations, so he created a simple prototype in C# that parsed and obfuscated JavaScript code. Our earlier work on byte-code obfuscation had taught us to pay close attention to the performance of protected code, so we used the C# prototype to measure the performance cost of particular obfuscation transformations.

At first sight, creating a C# proof-of-concept, knowing in advance that we would eventually move to TypeScript, seems to be an excessive waste. However, we gained weeks during product development with the opportunity to test our concepts and ideas in an early phase. While experimenting with various JavaScript protection transforms with the C# tool, the team prepared to start developing the eventual product in TypeScript. We learned many things from creating the C# tool (mainly some critical elements of the JavaScript source code tree transformations) that were extremely useful when we moved to TypeScript.

Let me mention just one of them.

We decided to use the Babel toolset to work with the input JavaScript code. Babel has an agile community behind it and provides all the functionality for parsing and analyzing the source code. As the ECMAScript standard evolves, we can be sure that the Babel community will follow it eagerly. The experience with the C# prototype showed us that we could utilize about a dozen Babel services for protecting the JavaScript code. But we also learned that building on them would slow down the engine, as we would piggyback concrete transformation routines on services designed for more general purposes. Our final decision was to use only the Babel parser and write the transformation code we needed from scratch. The result was a faster tool and greater confidence that we control the implementation. And, of course, we now have minimal dependencies on other packages.
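To make the idea of a purpose-built traversal concrete, here is a minimal TypeScript sketch. It is an illustration only, not JSDefender code: the `AstNode` shape is a simplified, ESTree-style assumption, the tree is built by hand where a real tool would take it from a parser, and `walk` and `renameIdentifiers` are hypothetical names.

```typescript
// Simplified, ESTree-style node shape (an assumption for this sketch).
interface AstNode {
  type: string;
  name?: string;
  [key: string]: unknown;
}

function isNode(value: unknown): value is AstNode {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { type?: unknown }).type === "string"
  );
}

// Purpose-built traversal: visit a node, then each child node or array of nodes.
function walk(node: AstNode, visit: (n: AstNode) => void): void {
  visit(node);
  for (const key of Object.keys(node)) {
    const child = node[key];
    if (Array.isArray(child)) {
      for (const item of child) {
        if (isNode(item)) walk(item, visit);
      }
    } else if (isNode(child)) {
      walk(child, visit);
    }
  }
}

// Hypothetical transform: rename identifiers in place according to a fixed map.
function renameIdentifiers(root: AstNode, names: Map<string, string>): void {
  walk(root, (n) => {
    if (n.type === "Identifier" && typeof n.name === "string") {
      const replacement = names.get(n.name);
      if (replacement !== undefined) n.name = replacement;
    }
  });
}

// Hand-built tree for `price * quantity`, standing in for parser output.
const tree: AstNode = {
  type: "BinaryExpression",
  operator: "*",
  left: { type: "Identifier", name: "price" },
  right: { type: "Identifier", name: "quantity" },
};

renameIdentifiers(tree, new Map([["price", "a"], ["quantity", "b"]]));
```

A real renaming transform also has to respect scopes, exports, and property names; the sketch only shows that a small traversal written for one job needs very little machinery.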

About 15 months later, as we are preparing to release JSDefender v2, we still believe we made the right decision. After the continuous refactoring from the start of the project, we have clean code and low technical debt. We’re rarely affected by security fixes in other packages (so far, almost all of them have been in packages that we use in our build process).

Every conversation we have about JavaScript obfuscation starts with an observation that even well protected code can eventually be reverse-engineered. If a hacker has the knowledge, the tools, and the time, you cannot create a program that is completely resilient to decrypting, deobfuscating, and understanding. However, with the right protection, you can significantly increase the time required to discover how a particular piece of code works. Instead of minutes, attackers take hours. Instead of hours, attackers take days or even months.

As humans, we prefer to read straightforward code with many clues such as comments, intuitive identifiers, self-describing method names, well-organized control flow, and many others. Everything that adds semantics to the code helps our understanding. On the contrary, things that remove semantics hinder us from getting acquainted with the details and intent of a program.

Though there are only a few JavaScript protection tools available, all of them build on the same approach. They strive to remove as much semantics from the source code as possible to prevent human reading and reverse-engineering.
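As a small illustration of what removing semantics means, the following TypeScript sketch places a readable function next to an equivalent one with the clues stripped. Both versions are hand-written for illustration and are not the output of JSDefender or any other tool; the function names and the discount logic are invented for the example.

```typescript
// Readable original: names, comments, and structure all carry meaning.
function applyDiscount(price: number, isMember: boolean): number {
  // Members get 10% off.
  const memberRate = 0.1;
  if (isMember) {
    return price - price * memberRate;
  }
  return price;
}

// Hand-written equivalent with the semantics stripped (illustrative only):
// no comments, meaningless names, and the branch folded into arithmetic.
function a(b: number, c: boolean): number {
  return b - b * 0.1 * Number(c);
}
```

For finite inputs the two functions return the same values, but a reader of the second one gets no hint that it has anything to do with membership discounts.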

By its nature, JavaScript is a peculiar programming language. Its structure offers about two dozen transformations that help to remove semantics. They range in complexity from simple to very sophisticated. When you opt to implement a specific transformation to change JavaScript source code, you need to answer a few questions:

While developing JSDefender, we examined several competing products, like JScrambler, NW.js, obfuscator.io (the online version of the JavaScript Obfuscator tool on GitHub), and a few others, to determine the top feature set we wanted to implement in our product. Though the team was tempted to implement all the transformations, we made decisions using the 80-20 rule. We carefully selected about a dozen of them to provide the most efficient and effective protection in the time that we had.

The 80-20 rule guides us in another way. When measuring the performance consequences of particular obfuscation techniques, we observed that the overall performance gets orders of magnitude better if we use partial protection. In other words, if performance is a concern, JSDefender can protect just the parts (“20 percent”) of the code that contain significant intellectual property or are otherwise sensitive to attack, while leaving the remaining code (“80 percent”) intact. The ultimate balance is up to you. This is why we designed JSDefender with highly granular configuration and partial protection in mind from the start, so when we create a new protection technique or modify an existing one, we do not need to focus on the partial protection aspect. When we implement the algorithm of the transformation, the engine takes care of managing which parts of the input code to transform, and which parts to leave alone.
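The separation between a transform and the engine that decides where to apply it can be sketched in a few lines of TypeScript. This is a hypothetical model, not JSDefender's architecture or configuration format: `CodeUnit`, `Transform`, `ProtectionConfig`, and `runEngine` are names invented for the example, and the comment-stripping transform is a deliberately naive stand-in for a real protection technique.

```typescript
// Hypothetical model: a program is a list of named code units.
interface CodeUnit {
  name: string;
  source: string;
}

// A transform only describes how to rewrite one unit's source.
type Transform = (source: string) => string;

// Hypothetical configuration: which units should be protected.
interface ProtectionConfig {
  protect: (unit: CodeUnit) => boolean;
}

// The engine, not the transform, decides which units are rewritten.
function runEngine(
  units: CodeUnit[],
  transforms: Transform[],
  config: ProtectionConfig
): CodeUnit[] {
  return units.map((unit) => {
    if (!config.protect(unit)) return unit; // left intact
    const source = transforms.reduce((src, t) => t(src), unit.source);
    return { ...unit, source };
  });
}

// Naive toy transform: drop line comments (would break `//` inside strings).
const stripLineComments: Transform = (source) =>
  source
    .split("\n")
    .map((line) => line.replace(/\/\/.*$/, "").trimEnd())
    .filter((line) => line.length > 0)
    .join("\n");

const units: CodeUnit[] = [
  { name: "licenseCheck", source: "// verify the key\nreturn key === expected;" },
  { name: "renderFooter", source: "// draw footer\nreturn '<footer/>';" },
];

// Protect only the sensitive unit; everything else passes through unchanged.
const result = runEngine(units, [stripLineComments], {
  protect: (unit) => unit.name === "licenseCheck",
});
```

Because the selection logic lives in one place, a new transform in this model never needs to know about partial protection at all.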

Protecting the JavaScript code is more than just obfuscating source files. Many development teams use JavaScript UI frameworks that have their peculiarities. Bundlers (such as Webpack, Metro, Rollup, Expo, and others) combine JavaScript modules into several web chunks. There are a few special techniques that can add extra value to protect bundles. We continuously examine such opportunities and add corresponding features to JSDefender.

One of the most significant arguments for building a new protection engine was our concern about the uncertainties of open-source JavaScript obfuscation frameworks. It was not just a question of “does the code work well” (and the related questions around measuring that!). The real question was how it fits into our overall quality expectations and approach to development.

The JSDefender team believes that excellent software can be delivered only by keeping the technical debt at a very low level. Automated testing, continuous refactoring, code reviews and pair programming, and regular in-team knowledge sharing are part of our everyday work.

When examining the source code of several open-source projects, though we acknowledge the excellent quality of some of them, we were not confident that we could apply our best practices and approach to those particular projects.

Let me tell you a story about testing, when we were building the C# proof-of-concept.

Originally, we intended it to be an inexpensive MVP (Minimum Viable Product) for JavaScript code protection. As we had worked with dozens of MVPs earlier, we thought we could create simple code with just the bare minimum of (automated) tests. After all, we planned to throw away the MVP after the demos and first rounds of feedback. Then we would start coding again on more solid grounds to keep the technical debt low!

But right on the second day of working on the MVP, it became clear that JavaScript obfuscation is not a context where you can postpone automated tests for a later phase. Without having the confidence that we were managing the parsed syntax tree the right way, we were unable to produce useful demos as quickly and reliably as we wanted! By the end of the first week, we created about 300 automated test cases. When we demoed the MVP the first time, we had over 600 of them.
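One common shape for such a test is a behavioral check: run the original and the transformed code on the same inputs and compare the results. The TypeScript sketch below is an illustration only, not taken from the JSDefender test suite; `compile`, `toyRename`, and `findMismatches` are hypothetical helpers, and the regex-based rename is a toy stand-in for a real syntax-tree transformation.

```typescript
// Compile a source string that is the body of a one-argument function.
function compile(body: string): (n: number) => unknown {
  return new Function("n", body) as (n: number) => unknown;
}

// Toy transform standing in for a real obfuscation step (an assumption):
// rename the local `total` to `_0` using a word-boundary regex.
function toyRename(body: string): string {
  return body.replace(/\btotal\b/g, "_0");
}

// Return the inputs for which original and transformed code disagree.
function findMismatches(
  originalBody: string,
  transformedBody: string,
  inputs: number[]
): number[] {
  const before = compile(originalBody);
  const after = compile(transformedBody);
  return inputs.filter((n) => !Object.is(before(n), after(n)));
}

const original = `
  let total = 0;
  for (let i = 1; i <= n; i++) total += i;
  return total;
`;

// An empty list means the transform preserved behavior on these inputs.
const mismatches = findMismatches(original, toyRename(original), [0, 1, 10, 100]);
```

A check like this says nothing about how well the code is protected; it only guards against the more basic failure of a transformation changing what the program does.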

From an external point of view, you may think that using C# and then moving to TypeScript later was an unnecessary waste of resources. The team had to port not only the actual code but also the test cases to the new programming language. We saw it differently. With the C# prototype, we learned two important things that were extremely useful by the time we wrote the first TypeScript code lines:

Because of these lessons, creating the TypeScript code base — I deliberately do not say “porting” — took less than 20 man-days.

In a couple of weeks, the number of our automated test cases will exceed 10 thousand.

Size matters. We knew that testing the correctness of obfuscation transformations with simple unit tests over short JavaScript code was not enough. We used a few different approaches to carry out protection tests on real programs. Let me tell you about three of them.

First, we use dogfooding. The first enormous test is JSDefender itself — one of the last steps in our build-chain is JSDefender protecting its source with itself. We use Webpack, and the code contains an internal Webpack plugin that uses the current build of the source to obfuscate the current build. If we have a bug, there’s a high likelihood that JSDefender will fail as we try to run it.

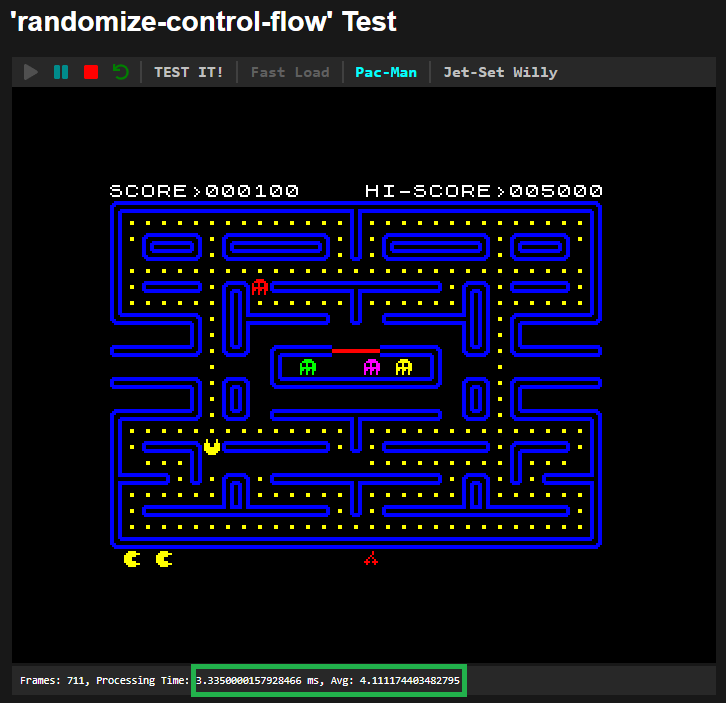

Second, we have a few large JavaScript files that run in the browser. We protect them in dozens of ways and test how they behave after that in the browser. We instrumented some of these files with analytics that help us measure performance degradation. One of our engineers is a fan of vintage computers. He created a JavaScript version of a ZX Spectrum emulator. (Did you know that this was the microcomputer of the 1980s that Satya Nadella used to learn computer programming?)

The code is about 1.5 Mbytes. It runs tests in about 40 seconds and measures a few things. The first figure shows a successful test case with low performance degradation, while the second indicates a performance penalty that is too high:

Third, we used real website tests with a few partners. One of them was scrummate.com (we use their tool for managing the JSDefender team’s work). With their permission, we downloaded a few core JavaScript files of the website (a few Mbytes in size) and protected them. Then, with the help of a plugin in the browser, we redirected the requests for the original JavaScript files to the protected ones hosted on the test machine. This way, we could examine their behavior.

We live in the era of cloud services, so offering a JavaScript protection service in the cloud seems like a good idea. In fact, we designed and implemented JSDefender to run either on-premises or in the cloud. For example, our free Online JSDefender Demo uses the same protection engine as the on-premises JSDefender. Because it lacks good build integration and does not implement all the protection transforms, you would not want to use it for a real project, but we think it is a great way to quickly get a sense of how JSDefender protects JavaScript.

Several other JavaScript protection products (including commercial-grade offerings) are primarily cloud services. This means you are required to send your unprotected code off site, so the service can read, process (and even store) your sensitive code before returning the protected version. This presents an extra layer of risk compared to an on-premises solution, and requires you to trust that the service provider:

Engineering and development teams occasionally make mistakes — just as our team does. What if such an issue leads to the leaking of your unprotected code? Simply put, we know that many of our customers would rather not send their unprotected code to a service hosted somewhere beyond their control, run by entities they may not fully trust.

So, while understanding and admitting that there are reasonable cases for using an online code protection service, we emphasize the on-premises usage of JSDefender. Even if our engineering team makes mistakes, the impact of a potential bug is significantly less than in the online case, since our customers still have their infrastructure, policies, and controls in place to prevent sensitive code from leaving their premises.

You can get started with JSDefender by downloading a fully functional, time-limited trial copy. Trials come with full product support.

JSDefender is available as part of a PreEmptive Protection Team, Group, or Enterprise license and is free to current subscribers at those levels. Standalone pricing is also available. To find out more, request a quote.

Our Online Demo version of JSDefender is also available with no sign-up needed!