Of course, everyone is “for security” in principle. The hard question that each organization has to answer for itself is “how much is enough?” Over-engineering is (by definition) excessive, and over-engineering application security can, in fact, be devastating: overly complex algorithms, architectures and processes can compromise user experience, degrade performance and slow development velocity. On the other hand, punishment is swift for organizations that cut corners and do not effectively secure their applications, their data and, most importantly, their users and business stakeholders. Finding and maintaining that balance can be time-consuming and, because you can never be sure you’ve gotten it exactly right, it can also be a thankless job.

Given all of this, you can almost forgive development organizations when they are seduced into the magical thinking of “trusted computing.” Note: do not conflate magical trusted computing with Trusted Execution Environments (TEEs) and their components and derivatives. A TEE defines a runtime in which applications can be securely executed, while the “magical” variety offers a safe haven where bad (or clumsy) actors simply do not exist. It is a utopian dream-place where only good actors have access.

This slippery slope begins innocently enough with a well-intentioned desire to avoid over-engineering security controls whenever and wherever possible, focusing primarily on the untrusted environments. In an effort to avoid appearing tone-deaf to the underlying business objectives, analysts, architects and development organizations often frame application security requirements within a worldview that sees untrusted systems as the exception and not the rule.

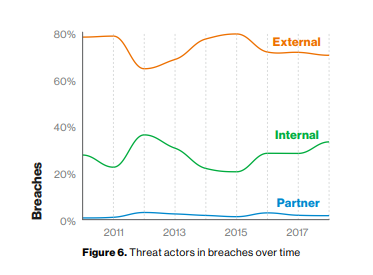

In Verizon’s 2019 Data Breach Investigations Report, nearly 40% of breaches were assigned to internal threat actors – and it’s probably worse than that.

The threat of malicious insiders to organizational security has historically been one of the most difficult challenges to address. Insiders often attack using authorized access, and their behavior is very difficult to distinguish from normal activity. This doesn’t even address scenarios where non-malicious, or unintentional, insiders are fooled by external attackers.

Further, organizations suffering insider attacks have always been reluctant to share data about those attacks publicly. While numerous regulations are imposing disclosure requirements for data loss (with the GDPR being among the most draconian), there are no such obligations tied narrowly to application exploits (unless they lead, as they often do, to subsequent data loss). Intellectual Property loss does not fall under that rubric.

The following potential threat actor personas are divided into “insiders” and “outsiders.” Depending on the specific business and the specific applications, this list may be shorter or longer.

| Insiders | Outsiders |
|---|---|
| Employees | Professional hackers |
| Contractors | Competitors |
| Vendors | Organized crime |
| Business partners | Non-professional hackers |
| | Hacktivists |
| | Nation state intelligence/military |
| | Malware authors |

The central point here is that, even with limited public data, there is simply no evidence to suggest that any organization has been able to establish and maintain an application safe haven that effectively excludes threat actors (ironically, perhaps, this is why there is so much interest in commercial TEEs).

Returning to the central theme: how can an organization most effectively (and efficiently) find that balance between security and productivity? Trust level must be viewed as a continuum, not as a binary state. One end of this continuum might include running applications inside Trusted Execution Environments, but that is simply not feasible for all but a very narrow slice of today’s application deployment scenarios.

Looking to the most closely related (and, in fact, inseparable) domain of information security, there is certainly a paradox (if not an outright contradiction) between the guidance assigned to sensitive data versus sensitive code. There is near-universal agreement that, at a minimum, information at rest must be encrypted at all times and in all systems. HIPAA, FISMA, GDPR, and 23 NYCRR 500 are just a tiny sampling of the growing body of information security requirements that mandate encryption of sensitive data (PII, etc.).

If you were looking for more evidence that there is no safe haven “inside” an organization, ask yourself why PII would need to be encrypted when it is safely at rest inside a well-run, secure organization.

If an application accesses sensitive data or is itself sensitive data (e.g. Intellectual Property), one would logically conclude that it should be protected by controls commensurate with (proportionate and analogous to) those in place for the associated data.

As with traditional information, mapping the lifecycle of an application is a fundamental step in measuring the potential for vulnerability exploitation (which, in some percentage of those cases, leads to an actual loss of some sort).

If computing trust is a continuum, where do your applications fall?

While this does not paint the entire picture, measuring the number of users (within a fixed timeframe) in each of these cells and assigning an appropriate multiplier for your scenarios offers a perspective on the likelihood of an incident occurring. 10,000 unverified users accessing an application on unmanaged devices across multiple countries should be far more concerning than a single, non-privileged employee running on an entirely managed platform.

| User type | Privileged | Non-privileged | Managed network | Managed device | Un-managed (by you) network | Un-managed (by you) device | Geographical distribution |
|---|---|---|---|---|---|---|---|
| Employee | | | | | | | |
| Contractor | | | | | | | |
| Partner | | | | | | | |
| Client | | | | | | | |
| Unverified | | | | | | | |
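The counting exercise described before the table can be sketched in a few lines of Python. This is only an illustration under stated assumptions: every multiplier below is a hypothetical placeholder (the article prescribes no values), the function name `exposure_score` is invented for this example, and the geographical distribution column is left out to keep the sketch short.

```python
# Hypothetical multipliers, chosen only for illustration; tune them to your own scenarios.
ROW_MULTIPLIER = {
    "Employee": 1.0,
    "Contractor": 1.5,
    "Partner": 2.0,
    "Client": 3.0,
    "Unverified": 5.0,
}
COLUMN_MULTIPLIER = {
    "Privileged": 3.0,
    "Non-privileged": 1.0,
    "Managed network": 1.0,
    "Managed device": 1.0,
    "Un-managed network": 2.0,
    "Un-managed device": 2.0,
}


def exposure_score(user_counts):
    """Sum (users in a cell) x (row multiplier) x (column multiplier).

    user_counts maps (user_type, column) to the number of users
    observed in that cell within a fixed timeframe.
    """
    total = 0.0
    for (user_type, column), users in user_counts.items():
        total += users * ROW_MULTIPLIER[user_type] * COLUMN_MULTIPLIER[column]
    return total


# The two scenarios contrasted in the text (cell assignments are assumptions).
single_employee = {
    ("Employee", "Non-privileged"): 1,
    ("Employee", "Managed device"): 1,
}
unverified_crowd = {
    ("Unverified", "Un-managed device"): 10_000,
}

print(exposure_score(single_employee))
print(exposure_score(unverified_crowd))
```

The absolute numbers mean little on their own. The value of a score like this is in comparing applications against each other, or the same application over time, using one consistent set of multipliers.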

Key points to keep in mind when setting out on an application security/risk management journey:

There is no magical “happy place” where protecting the Confidentiality of your software, maintaining the Integrity of your software, and controlling Access to your software no longer need tending. Certainly, the likelihood of a vulnerability exploit and the materiality of the resulting primary and secondary losses vary widely across application scenarios, but in none of them can you take off your thinking cap and ignore what is now a fundamental pillar of every application development project.

Application security cannot be managed as a silo. Whatever the strategy, it should be consistent with corresponding information security policies and practices. This consistency should include a review of any regulatory or statutory information privacy and security obligations that your organization may be subject to.

Risk can be shared, but not transferred (from a technological perspective). Cloud providers, third-party platforms, networks, and devices can simplify (or complicate) your obligations, but they can never relieve you of those obligations.

This is a journey – like every other flavor of security and risk management. No matter how appropriate your assumptions may be at the time that you make them – you must revisit them periodically. How often? Probably about as often as you revisit your information security and privacy policies.